Perception-Aware Appearance Fabrication

(FWF Lise Meitner M3319) 01.01.2022 - 31.12.2023

Wouldn’t it be amazing to be able to reproduce how an object looks? We could create appealing products, preserve cultural heritage, or create prosthetics indistinguishable from genuine body parts. One option for fabricating such objects is 3D printing. 3D printers are good at reproducing complex shapes, and some even print with multiple colors. Unfortunately, accurately reproducing hue, saturation, and gloss does not work well yet. The main hurdle in printing objects with a desired look is a basic question: how do we evaluate what an object looks like? We have devices that can measure individual attributes of an object, such as its shape, color, or gloss. However, when we see an object, we do not observe these attributes separately. Instead, we see the entire object at once, and the shape, color, and gloss combine into a single impression. Currently, it is unknown how this combination happens and how the individual attributes interact. Therefore, the main objective of this work is to investigate how humans perceive differences in appearance and how we can leverage these insights in manufacturing.

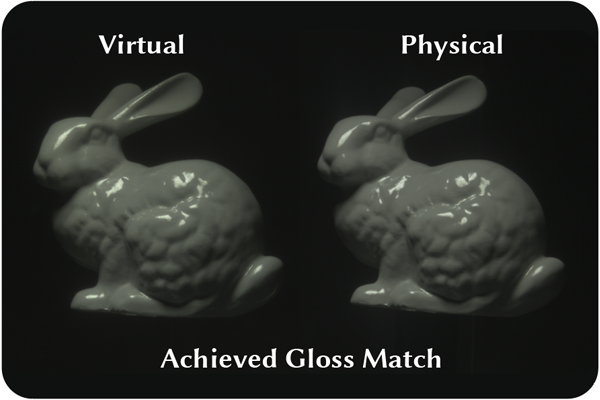

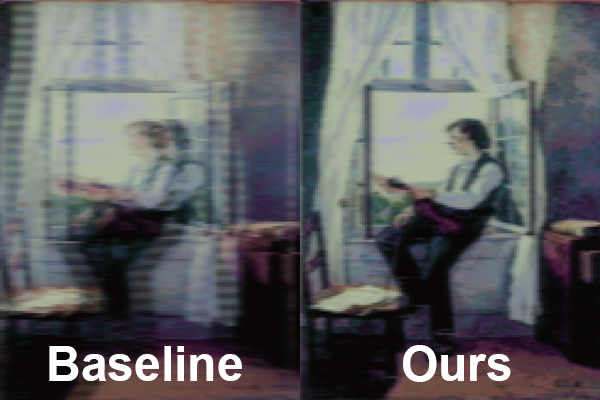

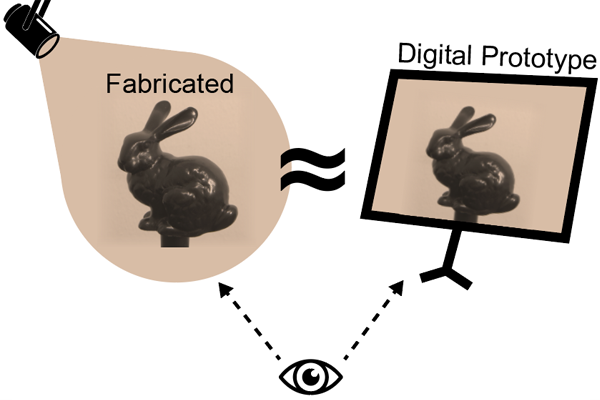

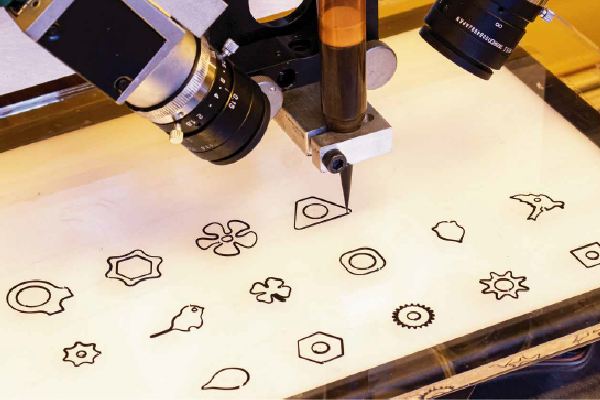

To examine human perception of gloss, we designed a display that could produce images so realistic that they were indistinguishable from real objects. With the display, we could turn individual components of gloss on and off. We found that the key to matching virtual and real objects is accurate reproduction of the dynamic range, i.e. matching the strong highlights and the deep blacks of the real world. With these findings, we designed a study that investigated the joint perception of color and gloss. The study revealed a simple and intuitive effect: the higher the gloss, the more saturated the color appears. We combined these insights into a single color-gloss management tool. Our tool automatically handles the saturation change between glossy finishes and produces a single consistent appearance. To deploy our algorithm, we also require a manufacturing technology. We proposed a novel tattooing-based system that applies inks to silicone media with needle injections. The system is capable of accurately reproducing human skin tones and creating silicone prosthetics that mimic genuine body parts. Achieving such high-fidelity output requires fine control of the fabrication process. To this end, we developed a self-adjusting printing technology. We first create a digital twin of the printing device on a computer. By interacting with the digital printer, a computer algorithm learns effective control strategies that can be applied to the real physical device. We showcased our full pipeline, from design tool to production, by manufacturing several prototypes ranging from colored printouts to tattooed skin-like silicone sheets.

Publications

Bin Chen, Akshay Jindal, Michal Piovarci, Chao Wang, Hans-Peter Seidel, Piotr Didyk, Karol Myszkowski, Ana Serrano, Rafal K. Mantiuk

SIGGRAPH Asia 2023

Michal Piovarci, Alexandre Chapiro, Bernd Bickel

SIGGRAPH 2023

Jorge Condor, Michal Piovarci, Bernd Bickel, Piotr Didyk

SIGGRAPH 2023

Kang Liao*, Thibault Tricard*, Michal Piovarci, Hans-Peter Seidel, Vahid Babaei

ICRA 2023

Liu Zhenyuan, Michal Piovarci, Christian Hafner, Raphael Charrondiere, Bernd Bickel

Eurographics 2023

Bin Chen, Michal Piovarci, Chao Wang, Hans-Peter Seidel, Piotr Didyk, Karol Myszkowski, Ana Serrano

SIGGRAPH Asia 2022

Michal Piovarci*, Michael Foshey*, Jie Xu, Timothy Erps, Vahid Babaei, Piotr Didyk, Szymon Rusinkiewicz, Wojciech Matusik, Bernd Bickel

SIGGRAPH 2022