Gloss management for consistent reproduction of real and virtual objects

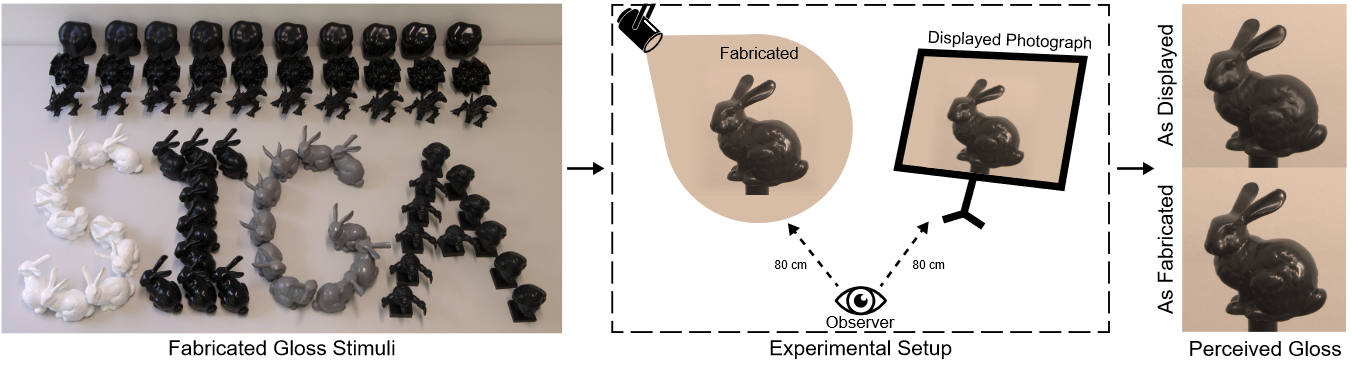

A good match of material appearance between real-world objects and their digital on-screen representations is critical for many applications such as fabrication, design, and e-commerce. However, faithful appearance reproduction is challenging, especially for complex phenomena such as gloss. In most cases, the view-dependent nature of gloss and the range of luminance values required for reproducing glossy materials exceed the current capabilities of display devices. As a result, appearance reproduction poses significant problems even with accurately rendered images. This paper studies the gap between the gloss perceived from real-world objects and their digital counterparts. Based on our psychophysical experiments on a wide range of 3D printed samples and their corresponding photographs, we derive insights on the influence of geometry, illumination, and the display's brightness, and we measure the change in gloss appearance due to display limitations. Our evaluation experiments demonstrate that using the resulting prediction of this change to correct material parameters in a rendering system improves the match of gloss appearance between real objects and their visualization on a display device.

Citation

Bin Chen, Michal Piovarči, Chao Wang, Hans-Peter Seidel, Piotr Didyk, Karol Myszkowski, Ana Serrano, Gloss management for consistent reproduction of real and virtual objects, The Visual Computer, volume 37, pages 2975–2987 (2021).

@inproceedings{Chen2022,
  author = {Bin Chen and Michal Piovar\v{c}i and Chao Wang and Hans-Peter Seidel and Piotr Didyk and Karol Myszkowski and Ana Serrano},
  title = {Gloss management for consistent reproduction of real and virtual objects},
  booktitle = {The Visual Computer},
  volume = {37},
  year = {2021},
}